For use cases in an image recognition context, LIMPID develops test scenarios and evaluation metrics to properly measure the local reliability of classifiers with a reject option.

Devising new methods to assess a model’s prediction reliability, including the ability to abstain from making a decision, and matching reliability tools with relevant legal and ethical requirements

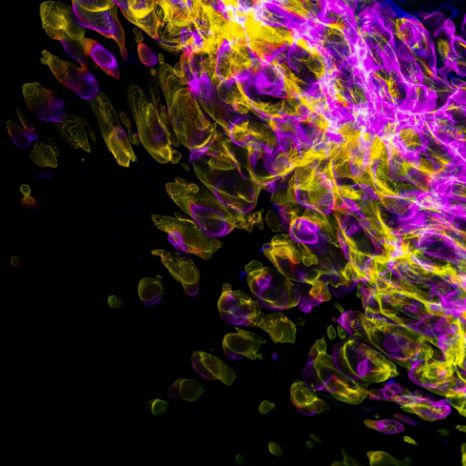

Our generic use case #1 in an image recognition context provides a framework to measure reliability and to insert safeguards that prevent low-confidence decisions, in coordination with legal and ethical compliance requirements. It also makes it possible to assess a model’s confidence level, or prediction reliability, and to give the decision tool the ability to abstain from making a decision in appropriate cases, an indispensable feature of trustworthy AI.

Use case #1 is an unbalanced problem in which the alarm should be raised for the minority class. In addition, the images requiring a decision may be blurred by weather conditions or partially occluded, so that no decision can be taken with enough confidence. To avoid numerous false alarms, and potential violations of individual rights, it is mandatory to measure the confidence of each decision precisely and to refrain from deciding when that confidence is not high enough.
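As a minimal sketch of what refraining from low-confidence decisions can look like in this unbalanced alarm setting, the Python example below assumes the model outputs a score for the minority (alarm) class and applies two thresholds: raise the alarm only above an upper threshold, clear the image only below a lower one, and defer to a human in between. The function name `decide` and the threshold values are hypothetical; in practice they would be tuned on validation data, for instance to bound the false-alarm rate.

```python
import numpy as np

def decide(p_alarm, t_low, t_high):
    """Map alarm-class scores to one of three decisions (hypothetical helper).

    "alarm"    when the score is at least t_high,
    "no_alarm" when the score is at most t_low,
    "defer"    otherwise: the system abstains and hands over to a human.
    """
    if not 0.0 <= t_low < t_high <= 1.0:
        raise ValueError("expected 0 <= t_low < t_high <= 1")
    p = np.asarray(p_alarm, dtype=float)
    decisions = np.full(p.shape, "defer", dtype=object)
    decisions[p >= t_high] = "alarm"
    decisions[p <= t_low] = "no_alarm"
    return decisions

# Hypothetical scores for four images and hypothetical thresholds.
scores = np.array([0.02, 0.40, 0.97, 0.75])
print(decide(scores, t_low=0.10, t_high=0.95).tolist())
# ['no_alarm', 'defer', 'alarm', 'defer']
```

Keeping a wide "defer" band trades fewer automatic decisions for fewer false alarms; the score plugged in here could come from any of the confidence methods discussed below.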

Incorporating this level of detail into reliability diagnosis is novel. The tools designed here serve to measure the impact of all the approaches developed in the robustness, fairness and explainability studies, which contribute to strengthening reliability.

A trustworthy classifier should be able to indicate whether its prediction is reliable, that is, to signal when it is preferable to refrain from using the model’s prediction and hand over to a human, particularly when the outcome is the issuance of a traffic fine. Several cases call for abstention at test time: in-distribution outliers, data from a rare class, out-of-distribution data and highly noisy data. It is important to stress that each of these cases may call for a different pre- or post-processing. For instance, if a subpopulation is underrepresented at training time, a sample from this subpopulation can be considered at test time as an in-distribution outlier and should raise an alarm. This problem is well identified and related to biased training samples; it is also addressed in our contribution on fairness. Out-of-distribution data may result from a distribution drift (covariate shift) and require some form of transfer learning. Highly noisy data may be passed through a denoiser before entering the decision process.
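One common way to flag in-distribution outliers and out-of-distribution inputs, swapped in here purely for illustration and not stated to be the method used in LIMPID, is a distance-based score in the classifier’s feature space: fit one Gaussian per class with a shared covariance, then score a sample by its squared Mahalanobis distance to the nearest class mean. The sketch assumes features have already been extracted; the function names and the toy 2-D data are hypothetical.

```python
import numpy as np

def fit_class_gaussians(features, labels):
    """Estimate one mean per class and a covariance matrix shared by all classes."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    means = np.stack([features[labels == k].mean(axis=0) for k in classes])
    # Subtract from each sample the mean of its own class.
    centered = features - means[np.searchsorted(classes, labels)]
    cov = centered.T @ centered / len(features)
    precision = np.linalg.pinv(cov)  # pseudo-inverse in case the covariance is singular
    return classes, means, precision

def outlier_score(x, means, precision):
    """Squared Mahalanobis distance to the closest class mean (higher = more atypical)."""
    x = np.asarray(x, dtype=float)
    diffs = x[:, None, :] - means[None, :, :]                  # shape (n, K, d)
    d2 = np.einsum("nkd,de,nke->nk", diffs, precision, diffs)  # shape (n, K)
    return d2.min(axis=1)

# Toy 2-D "features": two well-separated classes.
rng = np.random.default_rng(0)
train = np.vstack([rng.normal(0.0, 1.0, (200, 2)), rng.normal(5.0, 1.0, (200, 2))])
y = np.repeat([0, 1], 200)
classes, means, precision = fit_class_gaussians(train, y)
scores = outlier_score(np.array([[0.0, 0.0], [20.0, -20.0]]), means, precision)
print(scores[0] < scores[1])  # True: the second point lies far from both classes
```

A threshold on this score would trigger abstention; as the paragraph above stresses, what happens next (human review, transfer learning, denoising) depends on why the sample is atypical.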

We review local reliability properties for generic use case #1 in light of relevant legal and ethical requirements, identify gaps and make improvements where necessary. Post-training confidence methods constitute the baselines against which the new methods developed in this context are compared. A framework for empirically testing and assessing abstention mechanisms is set up, and evaluation metrics and test scenarios are defined and applied within it. Moreover, the improvements brought by our studies on fairness and explainability are assessed in terms of local reliability.
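Two metrics commonly used in the selective-classification literature to compare abstention mechanisms are the risk-coverage curve (the error rate among accepted samples as a function of the fraction of samples accepted) and the area under it, AURC. The sketch below assumes these are among the metrics of interest; the text does not say which metrics the framework actually uses. A lower AURC means the confidence score ranks errors last, so they are the first to be rejected.

```python
import numpy as np

def risk_coverage_curve(confidence, correct):
    """Selective risk at every coverage level, rejecting the least confident samples first.

    confidence: one score per sample, higher meaning more trustworthy.
    correct: one boolean per sample, True when the prediction was right.
    Returns (coverage, risk), from the single most confident sample up to full coverage.
    Ties in confidence are broken by sample order, a simplification.
    """
    confidence = np.asarray(confidence, dtype=float)
    errors = ~np.asarray(correct, dtype=bool)
    order = np.argsort(-confidence, kind="stable")  # most confident first
    n_accepted = np.arange(1, len(order) + 1)
    risk = np.cumsum(errors[order]) / n_accepted
    coverage = n_accepted / len(order)
    return coverage, risk

def aurc(confidence, correct):
    """Area under the risk-coverage curve, computed as the mean selective risk over coverage levels."""
    _, risk = risk_coverage_curve(confidence, correct)
    return float(risk.mean())

correct = [True, True, False, False]
print(aurc([0.9, 0.8, 0.7, 0.6], correct))  # errors ranked last: low AURC
print(aurc([0.6, 0.7, 0.8, 0.9], correct))  # errors ranked first: high AURC
```

Both calls share the same full-coverage error rate (0.5); only the ranking induced by the confidence score differs, which is exactly what an abstention mechanism is judged on.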

Special attention is paid to the counterpart of local reliability, namely local robustness.

Indeed, a model should also be robust to small transformations of the input, whether adversarial or resulting from the acquisition context. An overly stringent abstention mechanism will severely damage accuracy. The abstention mechanism itself should also be robust, up to a certain level of perturbation.
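One simple way to quantify whether an abstention mechanism is itself robust (an illustrative metric of our own, not one defined by the project) is the fraction of inputs whose answer/abstain decision never changes across a set of small perturbations. The sketch below assumes the mechanism is exposed as a function returning a boolean per input; `accept_fn`, `perturb_fn` and the toy rule are hypothetical.

```python
import numpy as np

def abstention_stability(accept_fn, x, perturb_fn, n_trials=10, seed=0):
    """Fraction of inputs whose answer/abstain decision never flips under perturbation.

    accept_fn: maps a batch to booleans (True = the model answers, False = it abstains).
    perturb_fn: maps (batch, rng) to a perturbed batch of the same shape.
    """
    rng = np.random.default_rng(seed)
    reference = np.asarray(accept_fn(x), dtype=bool)
    stable = np.ones(len(reference), dtype=bool)
    for _ in range(n_trials):
        perturbed = np.asarray(accept_fn(perturb_fn(x, rng)), dtype=bool)
        stable &= perturbed == reference
    return float(stable.mean())

# Toy 1-D example: the model answers only when |x| > 1 (hypothetical rule).
accept = lambda batch: np.abs(batch[:, 0]) > 1.0
gaussian_noise = lambda batch, rng: batch + rng.normal(0.0, 0.05, batch.shape)
x = np.array([[3.0], [0.0], [1.01]])
print(abstention_stability(accept, x, gaussian_noise))
```

Inputs lying close to the rejection boundary (here 1.01) are expected to flip; the metric tells how many inputs sit in that fragile zone for a given perturbation budget.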

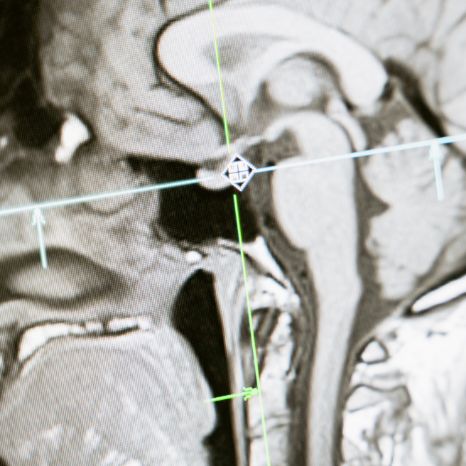

Deep convolutional neural networks (DCNNs) for face encoding learn to project images of faces into a high-dimensional space. This projection should not map all blurry images or non-faces to the same region of the space; otherwise, images from this category would obtain high impostor scores. The influence of noisy images (blur, occlusion, etc.) on DCNNs has been widely studied, for example in (Hendrycks et al. 2019). However, face recognition is a projection problem rather than a classification problem, and neither the influence of blur on an image’s position in the N-dimensional embedding space nor loss functions that constrain this position have been widely studied.
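To make the collapse problem concrete, the sketch below computes impostor scores, taken here as cosine similarities between embeddings of different identities, and compares well-spread embeddings with embeddings collapsed around a single point. The vectors are synthetic, not the output of a real face encoder; the 128-D size, the noise level and the shared "blur direction" are assumptions chosen only to illustrate the failure mode described above.

```python
import numpy as np

def impostor_scores(embeddings, identities):
    """Cosine similarities for every pair of embeddings with different identities."""
    e = np.asarray(embeddings, dtype=float)
    e = e / np.linalg.norm(e, axis=1, keepdims=True)  # unit length: dot product = cosine
    sims = e @ e.T
    ids = np.asarray(identities)
    i, j = np.triu_indices(len(ids), k=1)  # each unordered pair once
    different = ids[i] != ids[j]
    return sims[i[different], j[different]]

# Synthetic vectors only: 50 identities, one image each, in a 128-D space.
rng = np.random.default_rng(0)
d, n = 128, 50
ids = np.arange(n)
sharp = rng.normal(size=(n, d))                  # spread over the space
blur_direction = rng.normal(size=d)              # hypothetical shared region for blurry images
blurry = blur_direction + 0.1 * rng.normal(size=(n, d))
print(impostor_scores(sharp, ids).mean())        # close to 0
print(impostor_scores(blurry, ids).mean())       # close to 1: blurry impostors look alike
```

If blurry images of different people land in the same region, any verification threshold that accepts genuine pairs will also accept these impostor pairs, which is why a loss constraining where degraded images are projected is worth studying.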

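Methods for assessing a model’s prediction reliability fall into two categories: confidence measures derived from the classifier itself, and approaches that build a reject option into the design of the model.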

Many works (Corbiere et al. 2019, Wang et al. 2017) have reported that relying on the confidence measure reported by the classifier itself, i.e. the posterior probabilities output by a neural network (even when calibrated), fails to cover all the cases listed above. A higher confidence from the model itself does not necessarily imply a higher probability that the classifier is correct (Nguyen et al. 2015).

A promising framework consists in incorporating a reject option into the design of the predictive model, either as an additional “reject” class or, more interestingly, as a separate function. This framework has its roots in the early work of Chow (1970), which emphasized that prediction with rejection is governed by the trade-off between error rate and rejection rate, i.e. accuracy versus coverage. However, learning a classifier with an additional reject class amounts to focusing only on the boundaries between the real classes.
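Chow’s rule makes the error/rejection trade-off explicit: with a unit cost for a misclassification and a cost c for a rejection, the optimal decision predicts the most probable class when max_k P(k | x) ≥ 1 − c and rejects otherwise. The sketch below is a plug-in version that uses estimated posteriors in place of the true ones, which is precisely where the calibration issues mentioned above come in; the helper names and the toy posteriors are hypothetical.

```python
import numpy as np

REJECT = -1  # label used for "abstain"

def chow_rule(posteriors, reject_cost):
    """Plug-in Chow rule: predict argmax if its probability is >= 1 - c, else REJECT.

    posteriors: (n, K) array of estimated class probabilities (rows sum to 1).
    reject_cost: cost c of abstaining, relative to a unit misclassification cost.
    """
    p = np.asarray(posteriors, dtype=float)
    top = p.max(axis=1)
    pred = p.argmax(axis=1)
    return np.where(top >= 1.0 - reject_cost, pred, REJECT)

def accuracy_and_coverage(pred, y):
    """Accuracy on accepted samples and fraction of samples accepted."""
    accepted = pred != REJECT
    coverage = accepted.mean()
    accuracy = (pred[accepted] == y[accepted]).mean() if accepted.any() else float("nan")
    return float(accuracy), float(coverage)

P = np.array([[0.90, 0.10],
              [0.60, 0.40],
              [0.20, 0.80],
              [0.55, 0.45]])
y = np.array([0, 1, 1, 0])
for c in (0.3, 0.5):
    print(c, accuracy_and_coverage(chow_rule(P, c), y))
# 0.3 (1.0, 0.5)
# 0.5 (0.75, 1.0)
```

Sweeping c traces the accuracy/coverage trade-off. Note that once 1 − c ≤ 1/K the rule never rejects, since the largest of K posteriors is always at least 1/K.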

A more general framework, learning with abstention (LA), emerged from the work of Cortes et al. (2016) and differs from the confidence-based approach. In LA, a pair of functions, one for prediction and one for abstention, are learned jointly to minimize a loss that accounts for both the cost of abstaining and the cost of misclassification. This approach, developed so far for large-margin classifiers, boosting and structured prediction (Garcia et al. 2018), has very recently been explored in the context of deep neural networks (Croce et al. 2018), while the recent ConfidNet (Corbiere et al. 2019) combines closely related ideas with confidence estimation. Interestingly, the loss function proposed in LA has not yet been revisited from the angle of robustness by design, nor tested on the different abstention cases listed above.
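In the binary formulation of Cortes et al. (2016), with labels y in {−1, +1}, a predictor h and a rejector r that abstains when r(x) ≤ 0, the per-sample loss is L(h, r, x, y) = 1[y·h(x) ≤ 0]·1[r(x) > 0] + c·1[r(x) ≤ 0]: a unit cost for answering wrongly and a cost c for abstaining. The sketch below only evaluates this empirical loss on given outputs; the paper trains h and r jointly through a convex surrogate, which is not reproduced here, and the function name and toy values are hypothetical.

```python
import numpy as np

def abstention_loss(h, r, y, c):
    """Empirical learning-with-rejection loss for binary labels, averaged over samples.

    h: predictor outputs; sign(h) is the predicted label.
    r: rejector outputs; the model abstains when r <= 0.
    y: labels in {-1, +1}.
    c: cost of abstaining (in the binary case, only c < 1/2 makes abstention
       worthwhile, since a random guess already costs 1/2 on average).
    Per sample: 1 if the model answers and y*h <= 0, c if it abstains, 0 otherwise.
    """
    h, r, y = (np.asarray(a, dtype=float) for a in (h, r, y))
    answered = r > 0
    misclassified = (y * h <= 0).astype(float)
    return float(np.where(answered, misclassified, c).mean())

h = [2.0, -1.0, 0.5, -0.3]
r = [1.0, 0.5, -0.2, -1.0]
y = [1, 1, -1, -1]
print(abstention_loss(h, r, y, c=0.3))  # (0 + 1 + 0.3 + 0.3) / 4, i.e. about 0.4
```

Because the rejector is a separate function rather than an extra class, it can learn to abstain in regions (noisy, rare or shifted inputs) that are not simply close to a class boundary.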

Based on this general framework and on the assessment of local reliability properties, we design a learning-with-abstention approach dedicated to deep neural networks, with new criteria that leverage robustness as well as reliability.

Robustness and bias are particularly challenging given the limitations inherent in facial recognition training datasets. Face recognition algorithms are trained on public datasets containing millions of images and hundreds of thousands of identities, but these datasets are strongly biased in their identity and age distributions. This leads to performance variations depending on image quality, and possibly also on individual characteristics such as skin color or gender.
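When an abstention mechanism is in place, such performance variations show up in two quantities per subgroup: how often the model answers (coverage) and how often it is wrong when it does (selective error). The sketch below computes both; the group labels are hypothetical and could stand for image-quality bins or, where lawful, demographic attributes.

```python
import numpy as np

def per_group_report(groups, accepted, correct):
    """Coverage and selective error rate for each subgroup.

    groups: group label per sample.
    accepted: True where the model answered, False where it abstained.
    correct: True where the prediction was right (ignored for abstained samples).
    """
    groups = np.asarray(groups)
    accepted = np.asarray(accepted, dtype=bool)
    correct = np.asarray(correct, dtype=bool)
    report = {}
    for g in np.unique(groups):
        in_g = groups == g
        answered = in_g & accepted
        coverage = answered.sum() / in_g.sum()
        error = (~correct[answered]).mean() if answered.any() else float("nan")
        report[g.item()] = {"coverage": float(coverage), "selective_error": float(error)}
    return report

rep = per_group_report(
    groups=["sharp", "sharp", "sharp", "blurred", "blurred", "blurred"],
    accepted=[True, True, True, True, False, False],
    correct=[True, True, False, False, True, True],
)
print(rep)
```

A group with much lower coverage is handed over to human review disproportionately often, and a group with higher selective error is exposed to more wrong automatic decisions; both are worth tracking alongside overall accuracy.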

Chow 1970, C. Chow, “On optimum recognition error and reject tradeoff,” IEEE Transactions on Information Theory, vol. 16, no. 1, pp. 41–46, 1970. https://ieeexplore.ieee.org/document/1054406

Corbiere et al. 2019, C. Corbière, N. Thome, A. Bar-Hen, M. Cord, and P. Pérez, “Addressing Failure Prediction by Learning Model Confidence,” in Advances in Neural Information Processing Systems, vol. 32, H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché-Buc, E. Fox, and R. Garnett, Eds. Curran Associates, Inc., 2019. https://proceedings.neurips.cc/paper/2019/file/757f843a169cc678064d9530d12a1881-Paper.pdf

Cortes et al. 2016, C. Cortes, G. DeSalvo, and M. Mohri, “Learning with rejection,” Springer International Publishing, 2016, doi:10.1007/978-3-319-46379-7_5. https://cs.nyu.edu/~mohri/pub/rej.pdf

Croce et al. 2018, D. Croce, M. Gucciardo, S. Mangione, G. Santaromita, and I. Tinnirello, “Impact of LoRa Imperfect Orthogonality: Analysis of Link-Level Performance,” IEEE Communications Letters, vol. 22, no. 4, pp. 796–799, 2018. https://doi.org/10.1109/LCOMM.2018.2797057

Garcia et al. 2018, A. Garcia, C. Clavel, S. Essid, and F. d’Alché-Buc, “Structured Output Learning with Abstention: Application to Accurate Opinion Prediction,” in Proceedings of the 35th International Conference on Machine Learning, 2018, pp. 1681–1689. http://proceedings.mlr.press/v80/garcia18a.html

Hendrycks et al. 2019, D. Hendrycks, M. Mazeika, S. Kadavath, and D. Song, “Using Self-Supervised Learning Can Improve Model Robustness and Uncertainty,” in Advances in Neural Information Processing Systems, vol. 32, H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché-Buc, E. Fox, and R. Garnett, Eds. Curran Associates, Inc., 2019. https://proceedings.neurips.cc/paper/2019/file/a2b15837edac15df90721968986f7f8e-Paper.pdf

Nguyen et al. 2015, A. Nguyen, J. Yosinski, and J. Clune, “Deep Neural Networks Are Easily Fooled: High Confidence Predictions for Unrecognizable Images,” Jun. 2015.

Wang et al. 2017 T. Wang, C. Rudin, F. Doshi-Velez, Y. Liu, E. Klampfl, and P. MacNeille, “A Bayesian Framework for Learning Rule Sets for Interpretable Classification,” Journal of Machine Learning Research, vol. 18, no. 70, pp. 1–37, 2017.