Current anti-money laundering (AML) techniques violate fundamental rights, and AI would make things worse

09 July 2020

In a new paper, we analyze current AML systems as well as new AI techniques to determine whether they can satisfy the European fundamental rights principle of proportionality, a principle that has taken on new meaning as a result of the Digital Rights Ireland and Tele2 Sverige – Watson judgments of the Court of Justice of the European Union (CJEU).

In those cases, the CJEU drastically limited governments’ ability to impose data processing obligations on private entities for the purpose of aiding law enforcement. According to the Court, general and indiscriminate processing of all customer data is disproportionate. Moreover, processing that is intrusive because of the nature of the data can be imposed only to fight serious crime. These proportionality criteria are also embodied in several GDPR provisions relevant to AML processing.

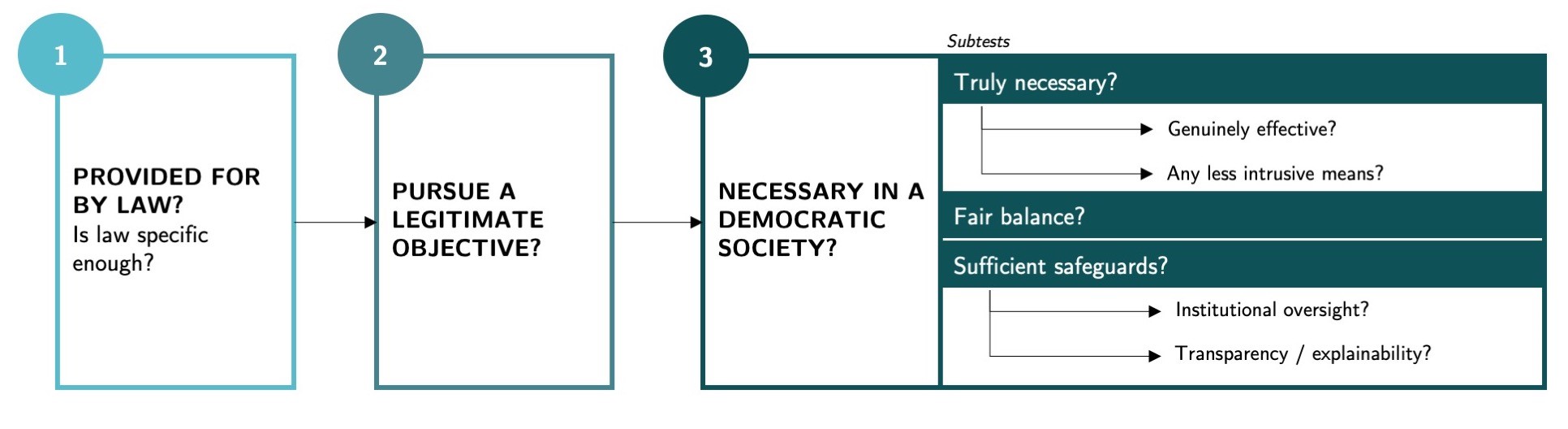

The question we addressed is whether AI-powered AML systems can satisfy these proportionality requirements. To conduct our analysis, we broke the proportionality test down into its components, including the question of whether government-imposed AML measures are “necessary in a democratic society”. This involves asking whether a measure is genuinely effective and whether it is the least intrusive measure available.

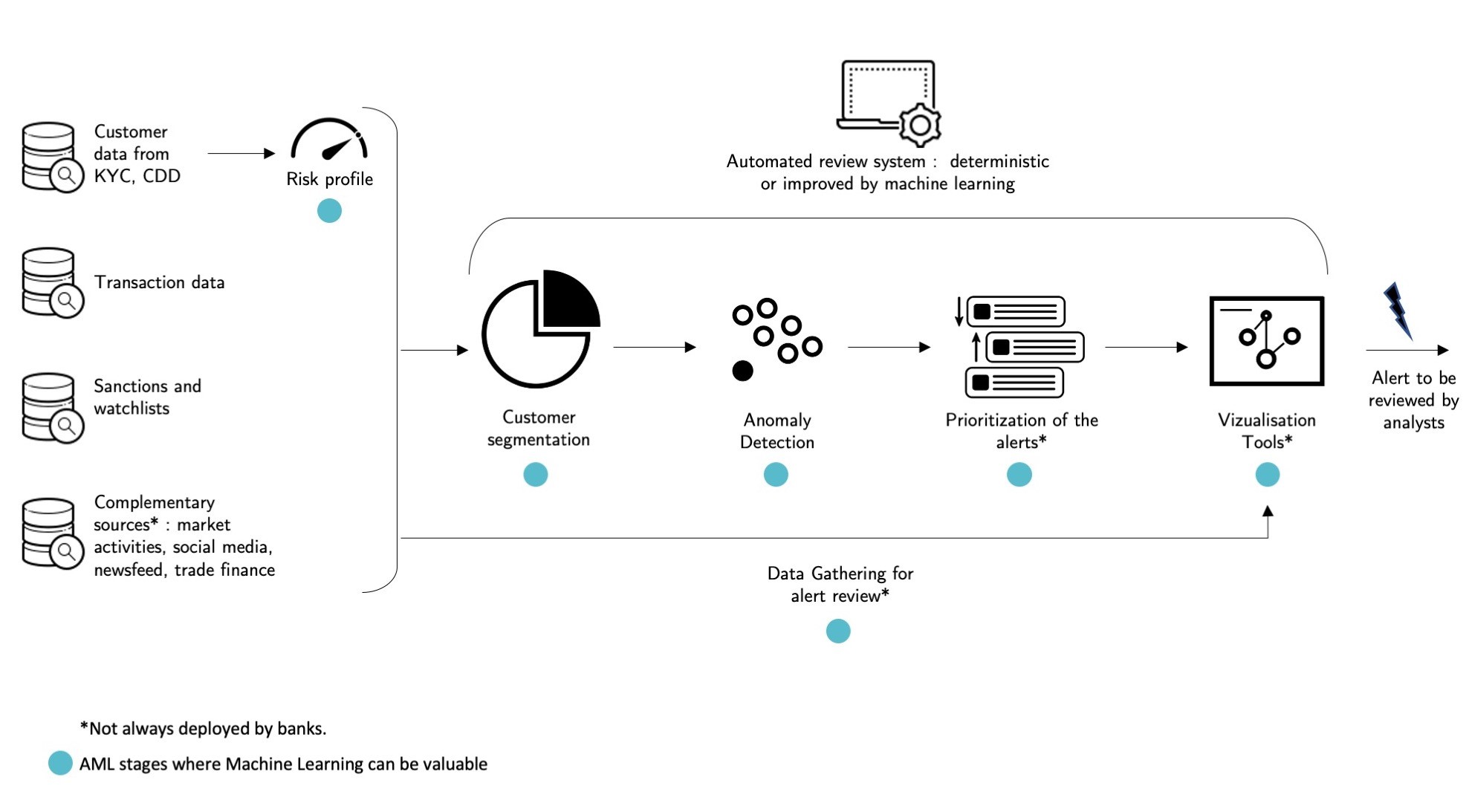

We illustrated the various steps of the proportionality test by discussing three important CJEU cases and a recent Dutch case that applied the test to algorithms used to detect social security fraud. We then examined how AML systems work and how AI would change them. Because AI enhances current systems rather than replacing them, a fundamental rights assessment must consider both the current system and the AI add-on features.

We then systematically applied each step of the proportionality test, first to current rule-based AML systems and then to AI-enhanced systems. We found that current AML systems fail the proportionality test in five respects. AI makes these failures more acute but does not fundamentally change their underlying causes. The one area where AI creates a new, specific problem is algorithmic explainability.

The five problems we identified are as follows:

- AML laws and regulations are not specific enough.

Proportionality requires that government-imposed technical measures that interfere with fundamental rights be “provided by law”, so as to ensure democratic transparency and accountability. The law or regulation must be detailed enough for banks and citizens to understand the scope and purpose of the processing. Under the GDPR, a specific law is also required for processing certain kinds of data (data relating to criminal offenses, and sensitive data). Specific rules also help ensure that banks do not go beyond what the law strictly requires, a practice known as gold plating. Current AML laws and regulations are vague, and regulators tend to expect ever-higher performance from banks based on evolving industry practices.

To cure this problem, AML regulations should specify which data must be reviewed and which transaction monitoring practices banks must implement. Certain sensitive aspects could be left to a confidential government ruling, a practice already used in cybersecurity laws applicable to critical national infrastructure.

- We don’t know which AML measures are really effective.

Because responsibility is divided between banks and law enforcement authorities, banks do not receive feedback on the actual usefulness of the suspicious activity reports they file. Current data suggest that law enforcement authorities investigate only a small minority (10% to 20%) of the reports sent by banks, meaning that most reports serve no law enforcement purpose. A privacy-intrusive profiling process that generates mostly useless reports is unlikely to pass the proportionality test, whether under the GDPR or under fundamental rights case law: the system cannot be shown to be “genuinely effective”, and it is unlikely to satisfy the “least intrusive means” requirement.

To cure this problem, a systematic feedback mechanism is needed between banks and law enforcement authorities, so that banks can focus on the most useful reports, namely those that actually lead to a law enforcement result. Feedback will also be needed to train AI-based systems to look for the criminal patterns that matter most from a law enforcement perspective. Collecting data on suspicious activity “in case it might be useful” to the police is not permitted under the proportionality test.
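As an illustration of how such feedback could be used once it exists, the minimal Python sketch below computes, for each detection rule, the share of its reports that law enforcement went on to investigate. The record format (`ReportFeedback`) and the rule identifiers are hypothetical assumptions made for the example; what is missing today is the feedback channel itself, not the arithmetic.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ReportFeedback:
    rule_id: str        # hypothetical identifier of the rule that produced the report
    investigated: bool  # feedback from law enforcement: was the report followed up?


def usefulness_by_rule(feedback: list[ReportFeedback]) -> dict[str, float]:
    """Return, for each rule, the share of its reports that were investigated."""
    sent: dict[str, int] = defaultdict(int)
    used: dict[str, int] = defaultdict(int)
    for fb in feedback:
        sent[fb.rule_id] += 1
        if fb.investigated:
            used[fb.rule_id] += 1
    # Every key in `sent` has at least one report, so the division is safe.
    return {rule: used[rule] / sent[rule] for rule in sent}


# Example: rule "R1" produced five reports, only one of which was investigated.
history = [ReportFeedback("R1", i == 0) for i in range(5)]
history += [ReportFeedback("R2", True), ReportFeedback("R2", True)]
print(usefulness_by_rule(history))  # {'R1': 0.2, 'R2': 1.0}
```

Rules whose reports are rarely investigated would be natural candidates for revision or retirement, which is the kind of evidence the “genuinely effective” and “least intrusive means” steps call for. The same labels could also serve as training data for an AI-based system.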

- “General and indiscriminate” processing is disproportionate and illegal.

According to the CJEU in the Digital Rights Ireland and Tele2 Sverige – Watson cases, analyzing all transaction data of all bank customers is illegal. There must be an objective link between the customer data being analyzed and the risk of criminal activity.

To cure this problem, banks must be able to show that the most intrusive profiling is conducted only for the customers and transactions with the highest criminal risk, and that the less intrusive processing applied to all customers serves only to establish risk profiles.
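A minimal Python sketch of this two-tier structure follows. It assumes, purely for illustration, that the light-touch step uses three coarse customer attributes and that intrusive profiling reads the full transaction history; the attributes, point values, and the 70-point threshold (`HIGH_RISK_THRESHOLD`) are hypothetical and are not drawn from any AML regulation. The point is structural: the function that reads detailed transaction data is called only for customers the first step has flagged.

```python
from dataclasses import dataclass, field

# Hypothetical cut-off (in risk points) at or above which intrusive profiling is allowed.
HIGH_RISK_THRESHOLD = 70


@dataclass
class Customer:
    customer_id: str
    # Coarse attributes used by the light-touch step (hypothetical examples).
    high_risk_jurisdiction: bool = False
    cash_intensive_business: bool = False
    politically_exposed: bool = False
    # Full transaction history (amounts in euros); read only by intrusive profiling.
    transactions: list[int] = field(default_factory=list)


def baseline_risk_points(c: Customer) -> int:
    """Less intrusive step, applied to every customer: coarse attributes only."""
    points = 0
    if c.high_risk_jurisdiction:
        points += 40
    if c.cash_intensive_business:
        points += 30
    if c.politically_exposed:
        points += 30
    return points


def intrusive_profile(c: Customer) -> dict:
    """More intrusive step: analyzes the full transaction history."""
    return {
        "customer_id": c.customer_id,
        "total": sum(c.transactions),
        "largest": max(c.transactions, default=0),
    }


def tiered_monitoring(customers: list[Customer]) -> tuple[dict[str, int], list[dict]]:
    """Score everyone lightly; profile in depth only those at or above the threshold."""
    scores = {c.customer_id: baseline_risk_points(c) for c in customers}
    profiles = [
        intrusive_profile(c)
        for c in customers
        if scores[c.customer_id] >= HIGH_RISK_THRESHOLD
    ]
    return scores, profiles


customers = [
    Customer("A", high_risk_jurisdiction=True, cash_intensive_business=True,
             transactions=[9_000, 9_500, 200]),
    Customer("B", transactions=[50, 1_200]),
]
scores, profiles = tiered_monitoring(customers)
print(scores)    # {'A': 70, 'B': 0}
print(profiles)  # [{'customer_id': 'A', 'total': 18700, 'largest': 9500}]
```

Keeping the two steps as separate functions also makes it easier to show a regulator exactly which data each tier touches.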

- Transparency problems will be exacerbated by AI.

A recent Dutch court decision on welfare fraud highlights the importance of transparency and explainability in the proportionality test. Individuals must be informed that they have been targeted by a suspicious activity report as soon as doing so no longer compromises the criminal investigation. Individuals also need to understand how and why the report was generated, both to be able to challenge it and to ensure that profiling and risk reports are not based on racial or ethnic bias.

To cure this problem, new algorithms should have built-in explanation features, and AML laws should be amended to require banks to inform all individuals targeted by a suspicious activity report within a set period, unless law enforcement authorities state that doing so would interfere with an investigation.
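For a rule-based monitor, one simple form of built-in explanation is to make every alert carry the reasons that triggered it. The Python sketch below illustrates this under stated assumptions: the two rules, the 10,000 EUR threshold, and the placeholder country codes are hypothetical. It does not address the harder case of machine-learning models, whose reasons would have to come from a separate explanation method.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Transaction:
    account_id: str
    amount_eur: int
    counterparty_country: str


# Placeholder country codes; a real list would come from the applicable rules.
HIGH_RISK_COUNTRIES = {"XX", "YY"}


def large_amount(tx: Transaction) -> Optional[str]:
    # Hypothetical threshold, used only for illustration.
    if tx.amount_eur >= 10_000:
        return f"amount of {tx.amount_eur} EUR is at or above 10000 EUR"
    return None


def high_risk_counterparty(tx: Transaction) -> Optional[str]:
    if tx.counterparty_country in HIGH_RISK_COUNTRIES:
        return f"counterparty country {tx.counterparty_country} is on the high-risk list"
    return None


# Each rule returns a human-readable reason when it fires, or None otherwise.
Rule = Callable[[Transaction], Optional[str]]
RULES: list[Rule] = [large_amount, high_risk_counterparty]


@dataclass
class Alert:
    account_id: str
    reasons: list[str]  # kept with the alert so it can later be explained and challenged


def evaluate(tx: Transaction, rules: list[Rule] = RULES) -> Optional[Alert]:
    """Run every rule; return an alert listing all reasons, or None if nothing fired."""
    reasons = [r for r in (rule(tx) for rule in rules) if r is not None]
    return Alert(tx.account_id, reasons) if reasons else None


alert = evaluate(Transaction("acc-1", 15_000, "XX"))
print(alert.reasons)
# ['amount of 15000 EUR is at or above 10000 EUR', 'counterparty country XX is on the high-risk list']
print(evaluate(Transaction("acc-2", 500, "FR")))  # None
```

Because the reasons are stored with the alert rather than reconstructed afterwards, they are available when the individual is eventually informed and wants to contest the report.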

- No regulator has a 360° view of the system.

Data protection and banking regulators generally do not have a full view of the AML system, including the parts controlled by law enforcement authorities. Proportionality requires an independent regulator to oversee the entire system to ensure that AML technical measures remain truly effective and do not insidiously evolve toward ever more intrusive monitoring techniques, a natural tendency in the law enforcement and national security field. There is a strong parallel with state surveillance for national security purposes, where proportionality requires strict institutional oversight, including review and approval of new surveillance techniques.

To cure this problem, AML laws could grant a special role to data protection authorities, or to a separate commission, to scrutinize AML systems end-to-end to ensure they remain compliant with fundamental rights law.

_____________________________________________________________________

By Astrid Bertrand, Winston Maxwell and Xavier Vamparys, Télécom Paris – Institut Polytechnique de Paris